Conversations on it:

Let's build a mini-ChatGPT that's powered by DeepSeek-R1 (100% local):

Why do we need to run it locally when we can always run it from the DeepSeek site?

Privacy, mainly. You can run it from the site if you want, but this is for companies or tech departments that want to run it locally and not worry about what data or information could be leaked.

Okay, but why build your own front end when Open WebUI exists? I can build an identical local solution with two commands (ollama pull, docker run).

A company may want to incorporate it into its own site for a specific purpose, with its own branding and feel.

Various reasons, but yes, if I were just messing with it I would do what you mentioned.

sunels@sunels:~$ docker run -d -p 3000:8080 -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

Unable to find image 'ghcr.io/open-webui/open-webui:ollama' locally

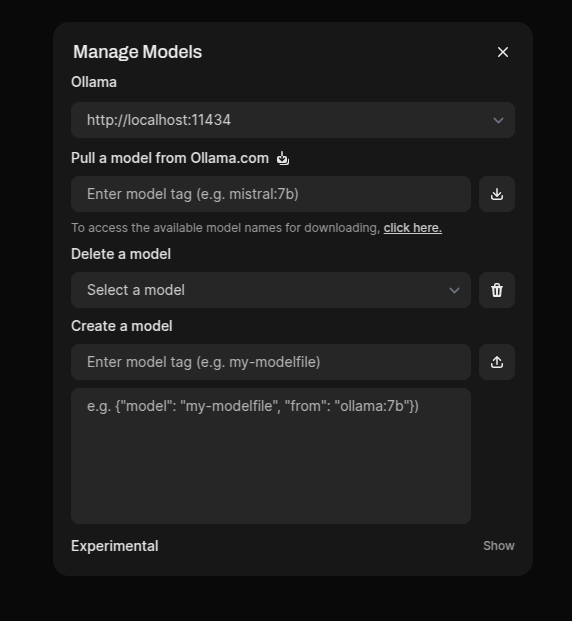

http://localhost:11434/
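For anyone who does want the custom front end discussed above, here is a minimal sketch of how your own code could talk to the model over HTTP. It assumes an Ollama server is actually reachable at the address above (11434 is Ollama's default port; the bundled `open-webui:ollama` container in the command above only publishes port 3000, so this may require Ollama running on the host). The model tag `deepseek-r1` and the helper names `build_generate_request` and `ask` are my own assumptions for illustration, not something from the original thread.

```python
import json
import urllib.request

# Address from the post; assumes an Ollama server is listening here.
OLLAMA_URL = "http://localhost:11434"
# Assumed model tag; replace with whatever `ollama pull` fetched on your machine.
MODEL = "deepseek-r1"


def build_generate_request(prompt, model=MODEL, base_url=OLLAMA_URL):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=120.0):
    """Send the prompt to the local server and return the full reply text."""
    request = build_generate_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    # Requires the server to be running and the model to be pulled already.
    print(ask("Say hello in one sentence."))
```

A branded company front end would call something like `ask()` from its own backend, so prompts and replies never leave the local machine.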
